
31 May 2018

Trialling ARM Boards as Compute Nodes

In recent years ARM-powered computers have become commonplace: manufacturers from Raspberry Pi through to Marvell are helping to power networks from Mythic Beasts (one of many providers offering Pi hosting) through to Cloudflare (who are deploying ARM servers at their network edge).

When we expanded to Geneva a couple of years ago, ARM servers were a serious consideration, but at the time they were not quite cost-effective for us, and we wanted to move straight to deployment rather than go through a significant R&D phase first. Now, though, we’re using ARM-based equipment from a number of vendors, and an opportunity arose to evaluate it for some niche purposes.

Our CRM as a service offers email delivery as one of its many features, and that workload is currently very lumpy: typically we see a constant trickle of transactional email, punctuated by large bursts of activity for marketing-type mailshots. We designed fulcrm’s email service around worker queues using Celery so that we could scale the required infrastructure on demand using virtual machines on our cloud. Having made many improvements to the database infrastructure of fulcrm, we then started to consider whether a cloud of low-powered CPUs would make sense for email rendering.

Our initial tests suggested that the hardware cost could pay back in under a year while also increasing the speed of our email sending by a significant margin. It was easy to decouple the stateless process of email rendering (which requires only network I/O for queue and database access) from stateful delivery of emails by SMTP (where SMTP servers need a persistent disk for delivery retries). The only piece of the puzzle we had to solve was adjusting our automated deployment states for the new architecture.
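To illustrate the split between stateless rendering and stateful delivery, here is a minimal sketch using only Python's standard library. It is not fulcrm's code: the real service uses Celery queues, whereas this example substitutes in-memory `queue.Queue` objects and threads, and the names `render_queue`, `delivery_queue`, `render_email` and `run_batch` are all hypothetical.

```python
import queue
import threading
from string import Template

# Hypothetical in-memory stand-ins for the real broker-backed queues.
render_queue = queue.Queue()    # jobs waiting to be rendered
delivery_queue = queue.Queue()  # rendered messages waiting for SMTP delivery

STOP = object()  # sentinel telling a render worker to exit


def render_email(job):
    """Stateless step: combine a template and its context into a message."""
    body = Template(job["template"]).substitute(job["context"])
    return {"to": job["to"], "body": body}


def render_worker():
    """Pull jobs, render them, and hand the result to the delivery stage."""
    while True:
        job = render_queue.get()
        if job is STOP:
            break
        delivery_queue.put(render_email(job))


def run_batch(jobs, worker_count=4):
    """Render a burst of jobs across several workers and collect the output."""
    workers = [threading.Thread(target=render_worker) for _ in range(worker_count)]
    for worker in workers:
        worker.start()
    for job in jobs:
        render_queue.put(job)
    # One sentinel per worker, queued after the jobs so every job is drained first.
    for _ in workers:
        render_queue.put(STOP)
    for worker in workers:
        worker.join()
    rendered = []
    while not delivery_queue.empty():
        rendered.append(delivery_queue.get())
    return rendered
```

Because the render workers keep no state of their own, adding capacity is just a matter of raising `worker_count` (or, in a real deployment, attaching more machines to the same queue). Only the consumer of `delivery_queue` would need persistent storage for retries.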

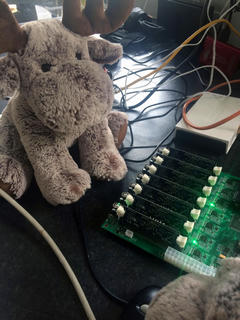

The hardware we are currently using consists of groups of seven SOPINE A64 single-board computers fitted into a Clusterboard. This form factor gives us 28 cores of 64-bit ARM Cortex-A53 power and 14GB of RAM as a single physical unit with one network connection and one power connection. It is small enough to tuck into what might otherwise be wasted space in a rack, effectively resulting in a 0U installation with minimal cabling.
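The totals above follow directly from the per-module figures. As a quick sanity check, the arithmetic can be sketched as below; the per-module values (four cores and 2GB of RAM each) are inferred from the totals quoted in this post, and the `Module` class and `cluster_totals` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Module:
    cores: int
    ram_gb: int


# Per-module figures inferred from the cluster totals (28 cores, 14GB over 7 modules).
SOPINE_A64 = Module(cores=4, ram_gb=2)
MODULES_PER_CLUSTERBOARD = 7


def cluster_totals(module, count):
    """Return (total cores, total RAM in GB) for `count` identical modules."""
    return module.cores * count, module.ram_gb * count


cores, ram_gb = cluster_totals(SOPINE_A64, MODULES_PER_CLUSTERBOARD)
print(f"{cores} cores, {ram_gb}GB RAM")  # prints "28 cores, 14GB RAM"
```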

A few small pieces of our stack were missing, notably:

- no ARM64 repository for SaltStack for Ubuntu Xenial (the image upon which our SOPINE’s operating system is based), so we rolled our own: `deb https://deb.faelix.net xenial main`
- no ARM64 build of MooseFS, so we compiled our own on a ROCK64 board

After some preflight checks, our first trials ran the SOPINEs in parallel with the virtual machine infrastructure. We gradually scaled down the virtual machines until they were handling none of the templating load, and in some cases performance increased. We believe that this is because the ARM boards are always running (albeit with very low power consumption when idle) and can immediately start accepting jobs submitted to the worker queues.

The SOPINEs are now in production use, regularly rendering significant volumes of emails for our SMTP cluster to send out. As expected, the underlying hardware transition is something that is completely invisible to the end users of fulcrm and the recipients of their emails. We fully anticipate deploying some more similarly-configured clusters as we scale out horizontally.